The Cloud Native Computing Foundation (CNCF) has emerged as a game-changer for web development. It hosts a broad ecosystem of open-source projects, including Kubernetes and Prometheus, that help developers build, deploy, and manage scalable, resilient, and cost-effective web applications.

At the heart of cloud-native development is the concept of microservices, an architectural pattern that decomposes applications into loosely coupled, independently deployable services. This modular approach fosters agility, enabling developers to make changes quickly and integrate new features without redeploying the entire application. CNCF tools support this architecture with a rich ecosystem of technologies that streamline the development, deployment, and management of web applications.

Benefits of Using CNCF Tools

Enhanced Agility and Rapid Iteration

CNCF tools foster a culture of agility and rapid iteration, enabling developers to swiftly adapt to changing market demands and customer needs. The microservices architecture, a cornerstone of cloud-native development, promotes modularity and self-contained services, allowing developers to make independent changes without disrupting the entire application. This granular approach facilitates rapid feature deployment and experimentation, ensuring that web applications remain competitive and responsive in the ever-evolving digital landscape.

Unparalleled Scalability and Elasticity

CNCF tools help developers build web applications that can scale up or down to meet fluctuating demand. Kubernetes, CNCF's flagship project, automates the management of containerized applications, and features such as the Horizontal Pod Autoscaler can adjust the number of running replicas as load changes. This dynamic scaling helps web applications absorb traffic surges without degrading performance, then scale back down so teams do not pay for idle capacity.

Exceptional Resilience and Fault Tolerance

CNCF tools help build resilience and fault tolerance into web applications, minimizing downtime. Microservices architecture, coupled with Kubernetes’ self-healing mechanisms (restarting failed containers and replacing lost pods), enables web applications to handle failures gracefully, isolating incidents and keeping them from cascading into broader outages. As a result, applications are far more likely to stay available through unforeseen disruptions.

Reduced Operational Costs and Streamlined Management

CNCF tools streamline the deployment, management, and monitoring of web applications, which can significantly reduce operational costs. Automation and self-service capabilities reduce the need for manual intervention, freeing IT teams to focus on higher-value work. In addition, projects such as Prometheus provide monitoring and observability, enabling teams to identify and resolve issues before they impact users.

Enhanced Security and Compliance

CNCF tools promote robust security practices and compliance throughout the web application development lifecycle. Containerization, a key tenet of cloud-native development, packages each application with its dependencies and isolates it from other workloads on the same host, which narrows the attack surface (although containers share the host kernel, so this isolation is weaker than that of virtual machines). In addition, the ecosystem provides security features such as role-based access control (RBAC) in Kubernetes and runtime threat detection with Falco, further safeguarding web applications from cyber threats.

Accelerated Innovation and Competitive Advantage

By embracing CNCF tools, web development teams can accelerate innovation and gain a competitive edge in the digital marketplace. The agile and scalable nature of cloud-native applications enables organizations to swiftly adapt to emerging technologies and market trends, rapidly introducing new features and functionalities that capture customer attention. This commitment to innovation drives business growth and solidifies a competitive position in the ever-changing digital landscape.

Challenges and Considerations for Microservices Adoption

Increased Complexity and Interdependencies

While microservices offer the promise of modularity and independent development, they also introduce a higher degree of complexity compared to traditional monolithic applications. With multiple services interacting and communicating, managing dependencies and ensuring seamless integration becomes a critical challenge. This complexity can lead to increased development time and ongoing maintenance overhead.

Distributed System Management and Observability

Microservices introduce the intricacies of managing a distributed system, where each service acts as an independent unit. This distributed nature necessitates robust monitoring and observability tools to track service health, identify performance bottlenecks, and proactively address potential issues. Without proper observability, troubleshooting and resolving incidents can become exceedingly difficult.

Data Consistency and Transaction Management

Maintaining data consistency across multiple microservices can pose a significant challenge. When each service owns its own database, a single ACID (Atomicity, Consistency, Isolation, Durability) transaction can no longer span the whole business operation, so preserving data integrity requires careful design and implementation. The saga pattern, which breaks an operation into a sequence of local transactions with compensating actions, and event sourcing are two common approaches to this challenge.
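A saga can be sketched as a list of steps, each pairing a local transaction with a compensating action that semantically undoes it. The minimal, in-process Python sketch below assumes a hypothetical order workflow (reserve inventory, create order, charge payment): if a step fails, the steps that already succeeded are compensated in reverse order. A real saga would call separate services over the network and persist its progress so it can resume after a crash, which this sketch omits.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SagaStep:
    """One local transaction plus the action that semantically undoes it."""
    name: str
    action: Callable[[], None]
    compensate: Callable[[], None]


def run_saga(steps: List[SagaStep]) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    completed: List[SagaStep] = []
    for step in steps:
        try:
            step.action()
        except Exception:
            # Undo the already-committed local transactions, newest first.
            for done in reversed(completed):
                done.compensate()
            return False
        completed.append(step)
    return True


# Hypothetical order workflow spanning three services.
log: List[str] = []


def fail_payment() -> None:
    log.append("charge payment (declined)")
    raise RuntimeError("payment declined")


steps = [
    SagaStep("reserve-inventory",
             lambda: log.append("reserve inventory"),
             lambda: log.append("release inventory")),
    SagaStep("create-order",
             lambda: log.append("create order"),
             lambda: log.append("cancel order")),
    SagaStep("charge-payment", fail_payment,
             lambda: log.append("refund payment")),
]

ok = run_saga(steps)
print(ok)   # False
print(log)
# ['reserve inventory', 'create order', 'charge payment (declined)', 'cancel order', 'release inventory']
```

The failed payment step is never compensated because it never committed; only the two earlier steps are rolled back.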

Security and Access Control in a Microservices Landscape

Securing a microservices architecture requires a comprehensive approach that extends beyond individual services. As each service exposes its own interface, the attack surface expands, making it crucial to implement robust authentication, authorization, and access control mechanisms. Additionally, microservices should be containerized and deployed in secure environments to minimize security vulnerabilities.

Team Structure and Communication in a Microservices Environment

Adopting a microservices architecture often necessitates changes in team structure and communication patterns. As services become more independent, teams need to adapt to a more decentralized approach, with clear ownership and responsibilities for each service. Effective communication and collaboration among these teams are essential for successful microservices development and management.

Testing and Deployment Strategies for Microservices

Testing microservices presents unique challenges due to their distributed nature and complex interactions. Automated testing strategies, including unit testing, integration testing, and end-to-end testing, are crucial for ensuring the quality and reliability of microservices. Additionally, deployment strategies need to be carefully considered, as rolling updates and canary releases become more relevant in a microservices environment.
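On Kubernetes, a rolling update of a Deployment is bounded by two settings: maxSurge (extra pods allowed above the desired count) and maxUnavailable (pods allowed to be unavailable), both defaulting to 25%. The Kubernetes documentation states that a percentage maxSurge is rounded up and a percentage maxUnavailable is rounded down. The small helper below (our own illustration, not a Kubernetes API) applies those rules to show how many pods may exist, and how many must stay available, in the middle of a rollout.

```python
def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max pods during rollout, min available pods) for a Deployment.

    Percentages are converted the way Kubernetes documents it:
    maxSurge rounds up, maxUnavailable rounds down.
    """
    surge = -(-replicas * max_surge_pct // 100)          # ceiling division
    unavailable = replicas * max_unavailable_pct // 100  # floor division
    return replicas + surge, replicas - unavailable


print(rolling_update_bounds(10))  # (13, 8)
print(rolling_update_bounds(3))   # (4, 3)
```

With 10 replicas, the rollout can run up to 13 pods at once while keeping at least 8 available. With 3 replicas, maxUnavailable rounds down to 0, so a new pod must come up before an old one is removed.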

Continuous Integration and Continuous Delivery (CI/CD) for Microservices

The adoption of CI/CD practices is essential for effectively developing and managing microservices. CI/CD pipelines automate the process of building, testing, and deploying microservices, enabling rapid iteration and continuous improvement. This automation streamlines the development process and reduces the risk of introducing bugs or regressions.

Skillset Development and Organizational Culture

Successfully adopting a microservices architecture requires a workforce with the necessary skills and mindset. Developers need to be proficient in microservice design patterns, containerization technologies, and distributed systems concepts. Additionally, a DevOps culture that fosters collaboration, automation, and continuous improvement is essential for effective microservices implementation.


Microservices Architecture in Cloud Native Development

Understanding Microservices Architecture

In essence, microservices architecture breaks down large, complex applications into a collection of smaller, independent services. Each microservice is responsible for a specific business function and communicates with other services through well-defined interfaces. This modular approach offers several distinct advantages over traditional monolithic applications:

Enhanced Agility and Faster Development:

Microservices architecture promotes agility by enabling independent development and deployment of individual services. This allows teams to work on different aspects of the application simultaneously, significantly reducing development cycles and time to market.

Improved Scalability and Elasticity:

Microservices can be scaled independently, allowing applications to seamlessly adapt to fluctuating demand. Scaling up or down specific services without affecting the entire application ensures optimal resource utilization and cost-effectiveness.

Fault Isolation and Resilience:

Because microservices are self-contained and isolated from each other, a failure in one service need not cascade into the entire application, especially when callers use safeguards such as timeouts and circuit breakers. This resilience improves availability and limits the overall impact of downtime.

Technology Heterogeneity and Innovation:

Microservices architecture embraces technology heterogeneity, allowing different teams to use the most suitable programming languages and frameworks for each service. This flexibility fosters innovation and enables rapid adoption of new technologies.

Microservices and the Cloud Native Approach

Microservices architecture aligns seamlessly with the cloud-native approach, which emphasizes the development and deployment of applications that are optimized for cloud environments. Cloud-native applications are characterized by their containerization, statelessness, and ability to leverage cloud services for elastic scaling and resource management.

Microservices architecture complements these cloud-native principles by providing a granular and decentralized approach to application development. The self-contained nature of microservices makes them well suited to containerization, and services designed to be stateless (keeping persistent state in external data stores) align with the cloud-native emphasis on resilience and elastic scaling.

Challenges and Considerations for Microservices Adoption

While microservices architecture offers numerous benefits, adopting this approach also presents certain challenges that need to be carefully considered:

Increased Complexity and Interdependencies:

Managing a distributed system of microservices introduces a higher degree of complexity compared to monolithic applications. Interdependencies between services, data consistency, and distributed system management require careful design and implementation.

Monitoring and Observability:

Effectively monitoring and observing the health and performance of microservices is crucial for maintaining application stability. Robust monitoring tools are essential for identifying and resolving issues before they impact users.

Testing and Deployment Strategies:

Testing microservices requires a comprehensive approach that encompasses unit testing, integration testing, and end-to-end testing. Deployment strategies need to be tailored to handle rolling updates and canary releases in a microservices environment.

Organizational and Cultural Shifts:

Adopting microservices often necessitates changes in team structure and communication patterns. Teams need to adapt to a more decentralized approach, with clear ownership and responsibilities for each service. A DevOps culture that embraces collaboration and continuous improvement is essential for successful microservices implementation.

Kubernetes

Kubernetes, often abbreviated as K8s, is a portable, open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Its control plane manages the lifecycle of those applications across a cluster of machines (nodes).
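Kubernetes works from declarative objects submitted to its API server. As a sketch, the Python below builds a minimal apps/v1 Deployment as a plain dictionary and prints it as JSON; the name web, the image registry.example.com/web:1.0, the port, and the resource requests are placeholder assumptions. Manifests are more commonly written in YAML, but kubectl also accepts JSON, so saving this output to a file and running kubectl apply -f on it would ask the control plane to keep three replicas running.

```python
import json


def web_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # desired state: how many pods to keep running
            # The selector must match the labels in the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": 8080}],
                        # Requests are what the scheduler uses to place pods.
                        "resources": {"requests": {"cpu": "250m", "memory": "128Mi"}},
                    }]
                },
            },
        },
    }


manifest = web_deployment("web", "registry.example.com/web:1.0", replicas=3)
print(json.dumps(manifest, indent=2))
```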

Core Components of Kubernetes

Kubernetes is composed of several key components that work in harmony to orchestrate containerized applications:

Kubelet:

The kubelet is an agent that runs on each node in the Kubernetes cluster. It watches the API server for pods assigned to its node and works with the container runtime to start those pods' containers and keep them running and healthy.

Kube-proxy:

kube-proxy runs on each node and maintains the network rules that implement Kubernetes Services, routing traffic addressed to a Service to one of the pods behind it.

API Server:

The API server is the central component of Kubernetes, exposing an API that allows users to interact with the cluster and manage its resources.

Controller Manager:

The controller manager runs the cluster's built-in controllers. Each controller watches resources through the API server and makes changes to move the cluster's current state toward the desired state specified in those resources.

Scheduler:

The scheduler assigns pods, the basic units of deployment in Kubernetes, to nodes in the cluster based on various criteria, such as resource availability and affinity/anti-affinity rules.
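The real kube-scheduler runs a pipeline of filtering and scoring plugins. The toy version below keeps only the core idea described above: filter out nodes that cannot fit a pod's CPU and memory requests, then score the remaining nodes and pick the best. The scoring rule here (prefer the node with the largest share of capacity still free, loosely similar to a least-allocated strategy) and all node names and sizes are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int        # allocatable CPU, millicores
    mem_capacity_mi: int       # allocatable memory, MiB
    cpu_requested_m: int = 0   # CPU already requested by pods on the node
    mem_requested_mi: int = 0  # memory already requested by pods on the node


@dataclass
class PodRequest:
    cpu_m: int
    mem_mi: int


def fits(pod: PodRequest, node: Node) -> bool:
    """Filter step: can the node satisfy the pod's resource requests?"""
    return (node.cpu_requested_m + pod.cpu_m <= node.cpu_capacity_m
            and node.mem_requested_mi + pod.mem_mi <= node.mem_capacity_mi)


def score(pod: PodRequest, node: Node) -> float:
    """Score step: average fraction of CPU and memory left free after placement."""
    cpu_free = (node.cpu_capacity_m - node.cpu_requested_m - pod.cpu_m) / node.cpu_capacity_m
    mem_free = (node.mem_capacity_mi - node.mem_requested_mi - pod.mem_mi) / node.mem_capacity_mi
    return (cpu_free + mem_free) / 2


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Return the chosen node's name, or None if no node fits (pod stays Pending)."""
    feasible = [n for n in nodes if fits(pod, n)]
    if not feasible:
        return None
    return max(feasible, key=lambda n: score(pod, n)).name


nodes = [
    Node("node-a", 4000, 8192, cpu_requested_m=3500, mem_requested_mi=2048),
    Node("node-b", 2000, 4096, cpu_requested_m=500, mem_requested_mi=1024),
    Node("node-c", 8000, 16384, cpu_requested_m=7900, mem_requested_mi=1000),
]
print(schedule(PodRequest(cpu_m=500, mem_mi=512), nodes))  # node-b
```

Here node-c is filtered out for lack of CPU headroom, and node-b outscores node-a because more of its capacity remains free after placement.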

Automating Application Deployment, Scaling, and Management

Kubernetes automates several critical aspects of containerized application management, including:

Deployment:

Developers describe the desired state of an application in declarative manifests (for example, a Deployment that specifies the container image and the number of replicas). Controllers in the controller manager then create and manage the ReplicaSets and pods needed to reach that state, and the kubelet on each assigned node starts the containers.

Scaling:

When a HorizontalPodAutoscaler is configured, Kubernetes automatically adjusts the number of replicas based on metrics such as CPU or memory utilization, so resources are allocated efficiently and applications can handle fluctuating demand.

Self-healing:

Kubernetes implements self-healing mechanisms to handle failures and maintain application availability. If a container crashes, the kubelet restarts it according to the pod's restart policy; if a pod is deleted or its node fails, controllers such as the ReplicaSet controller create replacement pods to restore the desired replica count.
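For the scaling behavior described above, the Kubernetes documentation gives the HorizontalPodAutoscaler's core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change made when that ratio is within a tolerance of 1.0 (0.1 by default). The function below is a simplified model of that calculation, clamped to hypothetical minimum and maximum replica counts; it ignores details the real controller handles, such as scale-down stabilization windows, pods that are not yet ready, and missing metrics.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA calculation for a single utilization-style metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target: scale out to 6.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
# 63% against 60% is within the 10% tolerance: stay at 4.
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4
```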

Kubernetes and the Cloud Native Landscape

Kubernetes has become the de facto standard for container orchestration in the cloud-native ecosystem, being used by organizations of all sizes to manage their cloud-based applications. Its popularity stems from its ability to provide a unified platform for managing containerized applications across different cloud environments, including public clouds, private clouds, and on-premises infrastructure.

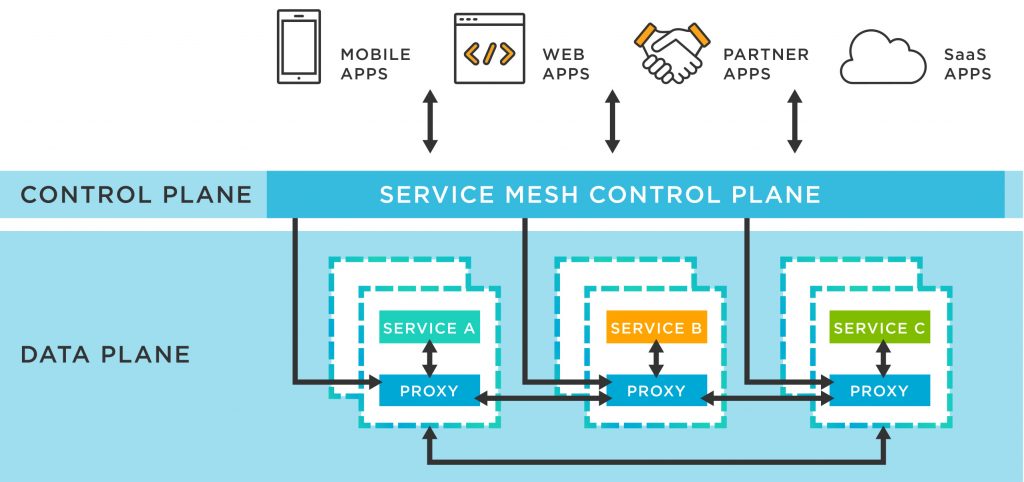

Service Meshes

Source: Tibco

A service mesh is a dedicated infrastructure layer, typically implemented as network proxies deployed alongside each microservice, that provides a set of capabilities for managing communication between services, such as:

Service discovery:

Automatically discovering and registering services within the microservices ecosystem.

Load balancing:

Distributing traffic across multiple instances of a service to optimize resource utilization and handle load spikes.

Health checks:

Monitoring the health of services and identifying and addressing failures promptly.

Circuit breaking:

Temporarily isolating unhealthy services to prevent cascading failures.

Traffic management:

Implementing routing rules, traffic shaping, and access control policies to control how services interact.

Observability:

Gathering metrics, logs, and traces from services to gain insights into application behavior and identify performance bottlenecks.
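As a rough illustration of how two of these capabilities interact, the sketch below combines round-robin load balancing with health-check results: instances marked unhealthy are skipped until they recover. The class and the endpoint addresses are hypothetical. Production mesh proxies use more sophisticated balancing strategies, and in a mesh this logic runs inside the proxy rather than in application code.

```python
from typing import Dict, List


class RoundRobinBalancer:
    """Round-robin over service instances, skipping unhealthy ones."""

    def __init__(self, endpoints: List[str]) -> None:
        self.endpoints = list(endpoints)
        self.healthy: Dict[str, bool] = {ep: True for ep in self.endpoints}
        self._cursor = 0

    def mark(self, endpoint: str, healthy: bool) -> None:
        """Record the latest health-check result for an instance."""
        self.healthy[endpoint] = healthy

    def pick(self) -> str:
        """Return the next healthy instance in rotation."""
        n = len(self.endpoints)
        for _ in range(n):
            endpoint = self.endpoints[self._cursor % n]
            self._cursor += 1
            if self.healthy[endpoint]:
                return endpoint
        raise RuntimeError("no healthy instances available")


lb = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"])
lb.mark("10.0.0.2:8080", False)  # a failed health check takes it out of rotation
print([lb.pick() for _ in range(4)])
# ['10.0.0.1:8080', '10.0.0.3:8080', '10.0.0.1:8080', '10.0.0.3:8080']
```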

Significance of Service Meshes in Cloud-Native Development

Service meshes play a crucial role in cloud-native development by addressing several challenges that arise in microservices architecture:

Simplified Communication Management:

Service meshes offload the complexity of service-to-service communication from individual services, providing a centralized and standardized approach to managing traffic, routing, and security policies.

Enhanced Observability and Troubleshooting:

Service meshes provide comprehensive observability capabilities, enabling developers and operations teams to monitor the health, performance, and interdependencies of microservices effectively.

Resilient Microservices Architecture:

Service meshes implement fault tolerance mechanisms, such as circuit breaking and retries, to ensure that microservices can gracefully handle failures and maintain application availability.
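Circuit breaking and retries work together: retries absorb brief glitches, while the breaker stops retry storms from hammering a service that is clearly down. Below is a minimal sketch of a consecutive-failure circuit breaker, assuming illustrative thresholds: after failure_threshold consecutive failures it opens and rejects calls immediately; once reset_timeout seconds pass it lets a trial call through (half-open) and closes again if that call succeeds. The class and function names are our own; in a service mesh, equivalent behavior is configured on the proxy rather than written into each service.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    """Consecutive-failure circuit breaker (illustrative sketch)."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock  # injectable so the sketch is easy to test
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # allow a trial call through
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("circuit open: failing fast")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        self.failures = 0
        self.opened_at = None  # a success closes the circuit
        return result


def call_with_retries(breaker: CircuitBreaker, fn: Callable[[], T],
                      attempts: int = 3) -> T:
    """Retry failed calls, but give up at once if the circuit is open."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return breaker.call(fn)
        except CircuitOpenError:
            raise  # do not keep calling a service the breaker has isolated
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: surface the last error


now = [0.0]  # fake clock for the demo
breaker = CircuitBreaker(failure_threshold=3, reset_timeout=30.0,
                         clock=lambda: now[0])


def flaky() -> str:
    raise ConnectionError("upstream unavailable")


try:
    call_with_retries(breaker, flaky)  # three failed attempts
except ConnectionError:
    pass
print(breaker.state)  # open

now[0] = 31.0  # after reset_timeout, a trial call is allowed
print(breaker.call(lambda: "ok"), breaker.state)  # ok closed
```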

Popular Service Mesh Solutions

Several service mesh solutions have emerged in the cloud-native landscape, each with its own strengths and features:

Istio:

Istio is a powerful and feature-rich service mesh, originally developed by Google, IBM, and Lyft and now a CNCF graduated project, that provides a comprehensive set of capabilities for managing microservices communication and observability. It is widely used in production environments and is supported by a large community.

Linkerd:

Linkerd, also a CNCF graduated project, is a lightweight and easy-to-use service mesh that emphasizes simplicity and performance. It is a popular choice for organizations seeking a straightforward approach to service mesh implementation.

Features and Benefits of Service Meshes

Service meshes offer a range of features and benefits for microservices communication and observability:

Consistent Communication and Policy Enforcement:

Service meshes enforce consistent communication patterns and policies across all microservices, ensuring a standardized approach to inter-service interaction.

Decoupled Communication from Services:

Offloading communication management to a separate layer reduces the complexity of microservices and allows developers to focus on business logic.

Granular Traffic Control and Routing:

Service meshes provide granular control over traffic flow, enabling routing rules, traffic shaping, and access control policies to be defined and enforced centrally.

End-to-End Observability and Insights:

Service meshes gather metrics, logs, and traces from services, providing a holistic view of application behavior and enabling troubleshooting and performance optimization.

Fault Tolerance and Resilience:

Service meshes implement fault tolerance mechanisms to isolate failures and prevent cascading disruptions, ensuring high availability of microservices applications.
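Granular traffic control is what makes the canary releases mentioned earlier practical: the mesh can send most requests to a stable version and a small share to a new one. Istio, for example, expresses this as weighted routes in a VirtualService. The Python below only imitates the idea; the version names and the 90/10 split are hypothetical, and, unlike a per-request random split, this sketch hashes a request key (such as a user ID) so each user consistently lands on the same version.

```python
import hashlib
from typing import Dict


def pick_version(request_key: str, weights: Dict[str, int]) -> str:
    """Map a request key to a version according to integer percentage weights."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100")
    # Stable hash -> bucket in [0, 100); the same key always gets the same bucket.
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    cumulative = 0
    for version, weight in sorted(weights.items()):
        cumulative += weight
        if bucket < cumulative:
            return version
    raise AssertionError("unreachable when weights sum to 100")


weights = {"stable": 90, "canary": 10}
counts = {"stable": 0, "canary": 0}
for i in range(10_000):
    counts[pick_version(f"user-{i}", weights)] += 1
print(counts)  # approximately a 90/10 split
```

Shifting the weights (for example to 50/50, then 0/100) moves traffic gradually, and setting the canary weight back to 0 acts as an instant rollback.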

Monitoring and Observability with Prometheus and Grafana

Prometheus: A Metric Collection Powerhouse

Prometheus is a metric collection and alerting system designed to gather numeric time-series data from application and infrastructure targets, either directly or through exporters that translate third-party metrics into its format. It stores these samples efficiently in its local time-series database, making it a popular choice for monitoring cloud-native applications.
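In Prometheus's pull model, each target publishes its current metric values over HTTP, conventionally at /metrics, using a plain-text exposition format, and Prometheus fetches that page on every scrape. A real service would normally use an official client library (such as prometheus_client for Python) rather than hand-rolled code; the dependency-free sketch below only renders a labelled counter in that format so you can see what a scrape returns. The metric name, labels, and Counter class are illustrative.

```python
from typing import Dict, Tuple

LabelSet = Tuple[Tuple[str, str], ...]


def _escape(value: str) -> str:
    """Escape a label value (backslash, double quote, newline)."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def _format_number(value: float) -> str:
    return str(int(value)) if value.is_integer() else repr(value)


class Counter:
    """A labelled, monotonically increasing counter (illustrative only)."""

    def __init__(self, name: str, help_text: str) -> None:
        self.name = name
        self.help_text = help_text
        self.values: Dict[LabelSet, float] = {}

    def inc(self, amount: float = 1.0, **labels: str) -> None:
        if amount < 0:
            raise ValueError("counters can only go up")
        key = tuple(sorted(labels.items()))
        self.values[key] = self.values.get(key, 0.0) + amount

    def expose(self) -> str:
        """Render the counter in the Prometheus text exposition format."""
        lines = [f"# HELP {self.name} {self.help_text}",
                 f"# TYPE {self.name} counter"]
        for key, value in sorted(self.values.items()):
            pairs = ",".join(f'{k}="{_escape(v)}"' for k, v in key)
            suffix = f"{{{pairs}}}" if pairs else ""
            lines.append(f"{self.name}{suffix} {_format_number(value)}")
        return "\n".join(lines) + "\n"


requests_total = Counter("http_requests_total", "Total HTTP requests handled.")
requests_total.inc(method="GET", code="200")
requests_total.inc(method="GET", code="200")
requests_total.inc(method="POST", code="500")
print(requests_total.expose(), end="")
# # HELP http_requests_total Total HTTP requests handled.
# # TYPE http_requests_total counter
# http_requests_total{code="200",method="GET"} 2
# http_requests_total{code="500",method="POST"} 1
```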

Prometheus’s key features include:

Pull-based data collection:

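Prometheus scrapes metrics over HTTP from configured targets, typically from a /metrics endpoint. Because Prometheus initiates each scrape, it can tell immediately when a target is unreachable, and it records this in the synthetic up metric.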

PromQL, a powerful query language:

Prometheus provides a rich query language, PromQL, that allows users to filter, aggregate, and analyze collected metrics.

Alerting capabilities:

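Prometheus evaluates alerting rules, written as PromQL expressions with threshold conditions, at regular intervals and sends firing alerts to Alertmanager, which handles deduplication, grouping, and routing of notifications.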
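PromQL's rate() turns an ever-increasing counter into a per-second rate over a time window, and an alerting rule pairs such an expression with a condition, for example rate(http_requests_total{code="500"}[5m]) > 0.5. The Python below is a simplified model of that evaluation: like rate(), it treats a drop in a counter as a reset, but it omits the extrapolation Prometheus applies at the window boundaries, so its numbers will not match Prometheus exactly. The sample data and the 0.5 requests-per-second threshold are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, counter value)


def simple_rate(samples: List[Sample]) -> float:
    """Average per-second increase of a counter across the given samples.

    As in PromQL's rate(), a drop in value is treated as a counter reset
    (e.g., the process restarted). Unlike rate(), there is no extrapolation
    to the edges of the query window.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    increase = 0.0
    for (_, prev), (_, curr) in zip(samples, samples[1:]):
        # After a reset the counter restarted from zero, so curr is the increase.
        increase += curr - prev if curr >= prev else curr
    return increase / (samples[-1][0] - samples[0][0])


def should_alert(samples: List[Sample], threshold_per_s: float) -> bool:
    """Mimics an alert condition of the form rate(...) > threshold."""
    return simple_rate(samples) > threshold_per_s


# Hypothetical 5xx counter scraped every 15 s; the drop at t=45 is a restart.
errors = [(0, 100), (15, 110), (30, 130), (45, 5), (60, 20)]
print(round(simple_rate(errors), 3))  # 0.833
print(should_alert(errors, threshold_per_s=0.5))  # True
```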

Grafana: Transforming Metrics into Insights

Grafana, a versatile visualization tool, complements Prometheus by transforming collected metrics into insightful dashboards and visualizations. It provides a user-friendly interface for creating and customizing dashboards, allowing users to monitor application health, identify performance bottlenecks, and track key trends.

Grafana’s key features include:

Rich visualization options:

Grafana offers a variety of visualization types, including time-series graphs, bar charts, heatmaps, and tables, and can overlay annotations that mark events such as deployments on those graphs.

Customizable dashboards:

Grafana allows users to create and customize dashboards, combining different visualizations and data sources to tailor monitoring to specific needs.

Alerting integration:

Grafana includes its own alerting engine that can evaluate rules against Prometheus data, and it can also display and manage alerts from Prometheus Alertmanager, giving teams visual notifications for critical events.

Monitoring and Troubleshooting with Prometheus and Grafana

The combination of Prometheus and Grafana provides a powerful toolkit for monitoring and troubleshooting cloud-native applications. Prometheus efficiently collects metrics, while Grafana transforms them into actionable insights. This combination enables users to:

Monitor application health:

Track key metrics like CPU utilization, memory usage, and response times to assess application health and identify potential issues.

Identify performance bottlenecks:

Analyze metrics to identify performance bottlenecks, such as slow database queries or inefficient code segments, and optimize application performance.

Troubleshoot incidents:

Correlate metrics with events and logs to pinpoint the root cause of incidents and resolve them effectively.

Gain deeper insights into application behavior:

Analyze trends, patterns, and anomalies in metrics to gain a deeper understanding of application behavior and make informed decisions.
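Response times, one of the health signals listed above, are commonly recorded in Prometheus as histograms, and a query such as histogram_quantile(0.9, sum by (le) (rate(http_request_duration_seconds_bucket[5m]))) estimates the 90th-percentile latency that a Grafana panel would then chart. The metric name in that query is a common convention, not something this article defines. The function below reproduces the core of the estimate (linear interpolation inside the bucket that contains the target rank) so the mechanics are visible; the bucket bounds and counts are hypothetical.

```python
import math
from typing import List, Tuple

Bucket = Tuple[float, float]  # (upper bound "le", cumulative count)


def bucket_quantile(q: float, buckets: List[Bucket]) -> float:
    """Estimate the q-quantile from cumulative histogram buckets.

    Mirrors the linear interpolation used by PromQL's histogram_quantile().
    Buckets must be sorted by upper bound and end with +Inf; the lowest
    bucket is assumed to start at 0, as it does for latencies.
    """
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    if len(buckets) < 2 or not math.isinf(buckets[-1][0]):
        raise ValueError("need at least two buckets, the last one +Inf")
    total = buckets[-1][1]
    if total == 0:
        return math.nan  # no observations yet
    rank = q * total
    # Index of the first finite bucket whose cumulative count reaches the rank.
    b = next((i for i, (_, count) in enumerate(buckets[:-1]) if count >= rank),
             len(buckets) - 1)
    if b == len(buckets) - 1:
        return buckets[-2][0]  # rank lies in the +Inf bucket
    upper, count = buckets[b]
    lower, prev_count = (0.0, 0.0) if b == 0 else buckets[b - 1]
    # Assume observations are spread evenly within the bucket.
    return lower + (upper - lower) * (rank - prev_count) / (count - prev_count)


# Hypothetical request-latency histogram (seconds) with 1,000 observations.
latency = [(0.1, 600), (0.25, 850), (0.5, 950), (1.0, 990), (math.inf, 1000)]
print(round(bucket_quantile(0.9, latency), 3))  # 0.375
```

Because the result is interpolated, its accuracy depends on how well the bucket boundaries match the latencies you care about.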

In a Nutshell

By embracing cloud-native principles, adopting best practices, and utilizing the right tools, organizations can harness the power of cloud-native technologies to build and manage modern web applications that deliver exceptional user experiences, meet business objectives, and achieve a competitive edge in the digital marketplace.

Partnering with GeekyAnts, a leading provider of cloud-native consulting and development services, can accelerate your cloud-native journey and ensure the successful implementation of cloud-native applications. With a team of experienced cloud-native engineers, GeekyAnts provides comprehensive cloud-native solutions, from design and development to deployment and management. Contact GeekyAnts today to unleash the true potential of cloud-native applications and transform your digital presence.